In January 2018, Microsoft released an advisory and security updates for a new class of hardware vulnerabilities involving speculative execution side channels (known as Spectre and Meltdown). In this blog post, we will provide a technical analysis of a new speculative execution side channel vulnerability known as L1 Terminal Fault (L1TF) which has been assigned CVE-2018-3615 (for SGX), CVE-2018-3620 (for operating systems and SMM), and CVE-2018-3646 (for virtualization). This vulnerability affects Intel Core processors and Intel Xeon processors.

This post is primarily geared toward security researchers and engineers who are interested in a technical analysis of L1TF and the mitigations that are relevant to it. If you are interested in more general guidance, please refer to Microsoft’s security advisory for L1TF.

Please note that the information in this post is current as of the date of this post.

L1 Terminal Fault (L1TF) overview

We previously defined four categories of speculation primitives that can be used to create the conditions for speculative execution side channels. Each category provides a fundamental method for entering speculative execution along a non-architectural path, specifically: conditional branch misprediction, indirect branch misprediction, exception delivery or deferral, and memory access misprediction. L1TF belongs to the exception delivery or deferral category of speculation primitives (along with Meltdown and Lazy FP State Restore) as it deals with speculative (or out-of-order) execution related to logic that generates an architectural exception. In this post, we’ll provide a general summary of L1TF. For a more in-depth analysis, please refer to the advisory and whitepaper that Intel has published for this vulnerability.

L1TF arises due to a CPU optimization related to the handling of address translations when performing a page table walk. When translating a linear address, the CPU may encounter a terminal page fault which occurs when the paging structure entry for a virtual address is not present (Present bit is 0) or otherwise invalid. This will result in an exception, such as a page fault, or TSX transaction abort along the architectural path. However, before either of these occur, a CPU that is vulnerable to L1TF may initiate a read from the L1 data cache for the linear address being translated. For this speculative-only read, the page frame bits of the terminal (not present) page table entry are treated as a system physical address, even for guest page table entries. If the cache line for the physical address is present in the L1 data cache, then the data for that line may be forwarded on to dependent operations that may execute speculatively before retirement of the instruction that led to the terminal page fault. The behavior related to L1TF can occur for page table walks involving both conventional and extended page tables (the latter of which is used for virtualization).

To illustrate how this might occur, it may help to consider the following simplified example. In this example, an attacker-controlled virtual machine (VM) has constructed a page table hierarchy within the VM with the goal of reading a desired system (host) physical address. The following diagram provides an example hierarchy for the virtual address 0x12345000 where the terminal page table entry is not present but contains a page frame of 0x9a0 as shown below:

After setting up this hierarchy, the VM could then attempt to read from system physical addresses within [0x9a0000, 0x9a1000) through an instruction sequence such as the following:

4C0FB600 movzx r8,byte [rax] ; rax = 0x12345040

49C1E00C shl r8,byte 0xc

428B0402 mov eax,[rdx+r8] ; rdx = address of signal array

By executing these instructions within a TSX transaction or by handling the architectural page fault, the VM could attempt to induce a speculative load from the L1 data cache line associated with the system physical address 0x9a0040 (if present in the L1) and have the first byte of that cache line forwarded to an out-of-order load that uses this byte as an offset into a signal array. This would create the conditions for observing the byte value using a disclosure primitive such as FLUSH+RELOAD, thereby leading to the disclosure of information across a security boundary in the case where this system physical address has not been allocated to the VM.
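The arithmetic behind this example can be sketched in a few lines of Python. This is only an illustrative model, not code that performs the attack: the function names are hypothetical, the page frame mask assumes the architectural maximum of bits 12 through 51, and the reload latencies are synthetic values standing in for real FLUSH+RELOAD timing measurements.

```python
PAGE_SHIFT = 12                                   # 4 KiB pages
PAGE_OFFSET_MASK = (1 << PAGE_SHIFT) - 1
# Assumption: page frame bits occupy bits 12..51 of a 64-bit entry.
PAGE_FRAME_MASK = ((1 << 52) - 1) & ~PAGE_OFFSET_MASK


def speculative_physical_address(pte, virtual_address):
    """Address a vulnerable CPU may look up in the L1D for a not-present PTE."""
    if pte & 1:
        raise ValueError("L1TF concerns entries whose Present bit is 0")
    return (pte & PAGE_FRAME_MASK) | (virtual_address & PAGE_OFFSET_MASK)


def signal_array_offset(leaked_byte):
    """Mirror of `shl r8, 0xc`: each byte value selects its own 4 KiB page."""
    return leaked_byte << PAGE_SHIFT


def recover_byte(reload_latencies):
    """FLUSH+RELOAD decode: the page that reloads fastest was touched."""
    return min(range(len(reload_latencies)), key=reload_latencies.__getitem__)


# Not-present terminal entry with page frame 0x9a0, accessed via 0x12345040.
pte = 0x9A0 << PAGE_SHIFT
print(hex(speculative_physical_address(pte, 0x12345040)))   # 0x9a0040

# Synthetic timings: every page is slow except the one indexed by byte 0x41.
latencies = [300] * 256
latencies[0x41] = 40
print(hex(recover_byte(latencies)))                          # 0x41
```

Shifting the byte by 12 places each of the 256 possible values on a separate page of the signal array, which is what lets the later timing pass attribute a fast reload to exactly one byte value.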

While the scenario described above illustrates how L1TF can apply to inferring physical memory across a virtual machine boundary (where the VM has full control of the guest page tables), it is also possible for L1TF to be exploited in other scenarios. For example, a user mode application could attempt to use L1TF to read from physical addresses referred to by not present terminal page table entries within its own address space. In practice, it is common for operating systems to make use of the software bits in the not present page table entry format for storing metadata which could equate to valid physical page frames. This could allow a process to read physical memory that is not assigned to the process (or VM, in a virtualized scenario) or that is not intended to be accessible within the process (e.g. PAGE_NOACCESS memory on Windows).

Mitigations for L1 Terminal Fault (L1TF)

There are multiple mitigations for L1TF and they vary based on the attack category being mitigated. To illustrate this, we’ll describe the software security models that are at risk for L1TF and the specific tactics that can be employed to mitigate it. We’ll reuse the mitigation taxonomy from our previous post on mitigating speculative execution side channels for this. In many cases, the mitigations described in this section need to be combined in order to provide a broad defense for L1TF.

Relevance to software security models

The following table summarizes the potential relevance of L1TF to various intra-device attack scenarios that software security models are typically concerned with. Unlike Meltdown (CVE-2017-5754), which only affected the kernel-to-user scenario, L1TF is applicable to all intra-device attack scenarios, as indicated by the CVE listed for each scenario in the table. This is because L1TF can potentially provide the ability to read arbitrary system physical memory.

| Attack Category | Attack Scenario | L1TF |
|---|---|---|
| Inter-VM | Hypervisor-to-guest | CVE-2018-3646 |
| Inter-VM | Host-to-guest | CVE-2018-3646 |
| Inter-VM | Guest-to-guest | CVE-2018-3646 |
| Intra-OS | Kernel-to-user | CVE-2018-3620 |
| Intra-OS | Process-to-process | CVE-2018-3620 |
| Intra-OS | Intra-process | CVE-2018-3620 |
| Enclave | Enclave-to-any | CVE-2018-3615 |
| Enclave | VSM-to-any | CVE-2018-3646 |

Preventing speculation techniques involving L1TF

As we’ve noted in the past, one of the best ways to mitigate a vulnerability is by addressing the issue as close to the root cause as possible. In the case of L1TF, there are multiple mitigations that can be used to prevent speculation techniques involving L1TF.

Safe page frame bits in not present page table entries

One of the requirements for an attack involving L1TF is that the page frame bits of a terminal page table entry must refer to a valid physical page that contains sensitive data from another security domain. This means a compliant hypervisor and operating system kernel can mitigate certain attack scenarios for L1TF by ensuring that either 1) the physical page referred to by the page frame bits of not present page table entries always contains benign data and/or 2) a high order bit is set in the page frame bits that does not correspond to accessible physical memory. In the case of #2, the Windows kernel will use a bit that is less than the implemented physical address bits supported by a given processor in order to avoid physical address truncation (e.g. dropping the high order bit).
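As a rough illustration of option 2, the following Python sketch sets a high order bit in a not present entry and checks whether the resulting page frame still names accessible memory. It is not the Windows implementation: the function names, the choice of the highest implemented physical address bit, the 46-bit address width, and the 64 GiB memory size are all assumptions made for the example.

```python
PAGE_SHIFT = 12
PRESENT_BIT = 1 << 0


def set_high_order_bit(pte, implemented_pa_bits):
    """Return a not-present PTE with a high-order page frame bit set.

    Assumption: the bit chosen is the highest implemented physical address
    bit, so the processor will not truncate it away.
    """
    if pte & PRESENT_BIT:
        raise ValueError("only not-present entries are rewritten")
    return pte | (1 << (implemented_pa_bits - 1))


def frame_is_accessible(pte, top_of_memory, implemented_pa_bits):
    """True if the page frame bits name an address below top_of_memory."""
    address_mask = ((1 << implemented_pa_bits) - 1) & ~((1 << PAGE_SHIFT) - 1)
    return (pte & address_mask) < top_of_memory


# Hypothetical machine: 46 implemented physical address bits, 64 GiB of RAM.
PA_BITS = 46
TOP_OF_MEMORY = 64 << 30

original = 0x9A0 << PAGE_SHIFT                  # not present, frame 0x9a0
hardened = set_high_order_bit(original, PA_BITS)

print(frame_is_accessible(original, TOP_OF_MEMORY, PA_BITS))   # True
print(frame_is_accessible(hardened, TOP_OF_MEMORY, PA_BITS))   # False
```

The check only holds when populated memory does not extend into the range selected by the high order bit, which is why the text pairs this tactic with the condition that the bit "does not correspond to accessible physical memory".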

Beginning with the August, 2018 Windows security updates, all supported versions of the Windows kernel and the Hyper-V hypervisor ensure that #1 and #2 are automatically enforced on hardware that is vulnerable to L1TF. This is enforced both for conventional page table entries and extended page table entries that are not present. On Windows Server, this mitigation is disabled by default and must be enabled as described in our published guidance for Windows Server.

To illustrate how this works, consider the following example of a user mode virtual address that is not accessible and therefore has a not present PTE. In this example, the page frame bits still refer to what could be interpreted as a valid physical address in conjunction with L1TF:

26: kd> !pte 0x00000281`d84c0000

… PTE at FFFFB30140EC2600

… contains 0000000356CDEB00

… not valid

… Transition: 356cde

… Protect: 18 - No Access

26: kd> dt nt!HARDWARE_PTE FFFFB30140EC2600

+0x000 Valid : 0y0

+0x000 Write : 0y0

+0x000 Owner : 0y0

+0x000 WriteThrough : 0y0

+0x000 CacheDisable : 0y0

+0x000 Accessed : 0y0

+0x000 Dirty : 0y0

+0x000 LargePage : 0y0

+0x000 Global : 0y1

+0x000 CopyOnWrite : 0y1

+0x000 Prototype : 0y0

+0x000 reserved0 : 0y1

+0x000 PageFrameNumber : 0y000000000000001101010110110011011110 (0x356cde)

+0x000 reserved1 : 0y0000

+0x000 SoftwareWsIndex : 0y00000000000 (0)

+0x000 NoExecute : 0y0

With the August, 2018 Windows security updates applied, it’s possible to observe the behavior of setting a high order bit in the not present page table entry that refers to physical memory that is either inaccessible or guaranteed to be benign (in this case bit 45). Since this does not correspond to an accessible physical address, any attempt to read from it using L1TF will fail.

17: kd> !pte 0x00000196`04840000

… PTE at FFFF8000CB024200

… contains 0000200129CB2B00

… not valid

… Transition: 200129cb2

… Protect: 18 - No Access

17: kd> dt nt!HARDWARE_PTE FFFF8000CB024200

+0x000 Valid : 0y0

+0x000 Write : 0y0

+0x000 Owner : 0y0

+0x000 WriteThrough : 0y0

+0x000 CacheDisable : 0y0

+0x000 Accessed : 0y0

+0x000 Dirty : 0y0

+0x000 LargePage : 0y0

+0x000 Global : 0y1

+0x000 CopyOnWrite : 0y1

+0x000 Prototype : 0y0

+0x000 reserved0 : 0y1

+0x000 PageFrameNumber : 0y001000000000000100101001110010110010 (0x200129cb2)

+0x000 reserved1 : 0y0000

+0x000 SoftwareWsIndex : 0y00000000000 (0)

+0x000 NoExecute : 0y0
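The two raw PTE values from the debugger output above can be decoded by hand to confirm which address bit changed. The sketch below is a minimal Python decoder whose bit positions are inferred from the order and widths of the fields in the `dt nt!HARDWARE_PTE` output (one bit per flag, then a 36-bit page frame number starting at bit 12); the helper names are hypothetical.

```python
PAGE_SHIFT = 12
PFN_WIDTH = 36            # width of PageFrameNumber in the dt output


def decode_pte(pte):
    """Extract a few HARDWARE_PTE fields from a raw 64-bit entry."""
    return {
        "Valid": pte & 1,
        "Global": (pte >> 8) & 1,
        "CopyOnWrite": (pte >> 9) & 1,
        "Prototype": (pte >> 10) & 1,
        "reserved0": (pte >> 11) & 1,
        "PageFrameNumber": (pte >> PAGE_SHIFT) & ((1 << PFN_WIDTH) - 1),
    }


def highest_address_bit(pte):
    """Position of the highest set bit in the address formed by the PFN."""
    pfn = decode_pte(pte)["PageFrameNumber"]
    return pfn.bit_length() - 1 + PAGE_SHIFT


before = 0x0000000356CDEB00   # first dump, without the high order bit
after = 0x0000200129CB2B00    # second dump, with the updates applied

print(hex(decode_pte(before)["PageFrameNumber"]))   # 0x356cde
print(hex(decode_pte(after)["PageFrameNumber"]))    # 0x200129cb2
print(highest_address_bit(before))                  # 33
print(highest_address_bit(after))                   # 45
```

Bit 33 of the page frame number corresponds to bit 45 of the physical address once the 12-bit page offset is accounted for, which matches the bit called out in the text.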

In order to provide a portable method of allowing VMs to determine the implemented physical address bits supported on a system, the Hyper-V hypervisor Top-Level Functional Specification (TLFS) has been revised with a defined interface that can be used by a VM to query this information. This facilitates safe migration of virtual machines within a migration pool.

Flush L1 data cache on security domain transition

Disclosing information through the use of L1TF requires sensitive data from a victim security domain to be present in the L1 data cache (note that the L1 data cache is shared by all logical processors on the same physical core). This means disclosure can be prevented by flushing the L1 data cache when transitioning between security domains. To facilitate this, Intel has provided new capabilities through a microcode update that supports an architectural interface for flushing the L1 data cache.
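For readers unfamiliar with the interface, the sketch below models how privileged software might use it. The constant names and values (the `IA32_FLUSH_CMD` MSR at 0x10B, its `L1D_FLUSH` command bit, and the CPUID enumeration bit) come from Intel's public documentation rather than from this post. Because writing an MSR requires ring 0, the `wrmsr` parameter here is a hypothetical callback supplied by the caller, and the example only records what would be written.

```python
# CPUID.(EAX=07H, ECX=0):EDX[28] enumerates support for the flush command.
CPUID_7_0_EDX_L1D_FLUSH = 1 << 28
IA32_FLUSH_CMD = 0x10B        # write-only command MSR
L1D_FLUSH = 1 << 0            # bit 0: write back and invalidate the L1D


def flush_l1d(cpuid_7_0_edx, wrmsr):
    """Issue an L1D flush if the processor enumerates support for it.

    `wrmsr` is a placeholder for whatever privileged primitive the caller
    has for writing model-specific registers.
    """
    if not (cpuid_7_0_edx & CPUID_7_0_EDX_L1D_FLUSH):
        return False          # microcode update with the feature not present
    wrmsr(IA32_FLUSH_CMD, L1D_FLUSH)
    return True


# Record the write instead of touching hardware.
writes = []
flushed = flush_l1d(1 << 28, lambda msr, value: writes.append((msr, value)))
print(flushed, [(hex(msr), value) for msr, value in writes])
# True [('0x10b', 1)]
```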

Beginning with the August, 2018 Windows security updates, the Hyper-V hypervisor now uses the new L1 data cache flush feature when present to ensure that VM data is removed from the L1 data cache at critical points. On Windows Server 2016+ and Windows 10 1607+, the flush occurs when switching virtual processor contexts between VMs. This helps reduce the performance impact of the flush by minimizing the number of times this needs to occur. On previous versions of Windows, the flush occurs prior to executing a VM (e.g. prior to VMENTRY).
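To see why flushing only on a switch between VMs is cheaper than flushing on every VMENTRY, consider this small Python model. It is a simplification for illustration only: the function name and the schedule are hypothetical, and a real hypervisor tracks considerably more state per core.

```python
def count_flushes(vm_entries, flush_on_every_entry):
    """Count L1D flushes for a sequence of VM entries on a single core.

    vm_entries lists the VM that owns each virtual processor entered,
    in order. With flush_on_every_entry=False, a flush happens only when
    the VM differs from the one that ran previously on this core.
    """
    flushes = 0
    previous_vm = None
    for vm in vm_entries:
        if flush_on_every_entry or vm != previous_vm:
            flushes += 1
        previous_vm = vm
    return flushes


# Hypothetical schedule: frequent exits and re-entries within the same VM.
schedule = ["VM1", "VM1", "VM1", "VM2", "VM2", "VM1"]

print(count_flushes(schedule, flush_on_every_entry=True))    # 6
print(count_flushes(schedule, flush_on_every_entry=False))   # 3
```

A VM that exits to the hypervisor and resumes many times in a row pays for one flush under the second policy, rather than one per entry.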

For L1 data cache flushing in the Hyper-V hypervisor to be robust, the flush is performed in combination with safe use or disablement of HyperThreading and per-virtual-processor hypervisor address spaces.

For SGX enclave scenarios, the microcode update provided by Intel ensures that the L1 data cache is flushed any time the logical processor exits enclave execution mode. The microcode update also supports attestation of whether HT has been enabled by the BIOS. When HT is enabled, there is a possibility of L1TF attacks from a sibling logical processor before enclave secrets in the L1 data cache are flushed or cleared. The entity verifying the attestation may reject attestations from an HT-enabled system if it deems the risk of L1TF attacks from the sibling logical processor to not be acceptable.

Safe scheduling of sibling logical processors

Intel’s HyperThreading (HT) technology, also known as simultaneous multithreading (SMT), allows multiple logical processors (LPs) to execute simultaneously on a physical core. Each sibling LP can be simultaneously executing code in different security domains and privilege modes. For example, one LP could be executing in the hypervisor while another is executing code within a VM. This has implications for the L1 data cache flush because it may be possible for sensitive data to reenter the L1 data cache via a sibling LP after the L1 data cache flush occurs.

In order to prevent this from happening, the execution of code on sibling LPs must be safely scheduled or HT must be disabled. Both of these approaches ensure that the L1 data cache for a core does not become polluted with data from another security domain after a flush occurs.

The Hyper-V hypervisor on Windows Server 2016 and above supports a feature known as the core scheduler which ensures that virtual processors executing on a physical core always belong to the same VM and are described to the VM as sibling hyperthreads. This feature requires administrator opt-in for Windows Server 2016 and is enabled by default starting with Windows Server 2019. This, in combination with per-virtual-processor hypervisor address spaces, is what makes it possible to defer the L1 data cache flush to the point at which a core begins executing a virtual processor from a different VM rather than needing to perform the flush on every VMENTRY. For more details on how this is implemented in Hyper-V, please refer to the in-depth Hyper-V technical blog on this topic.

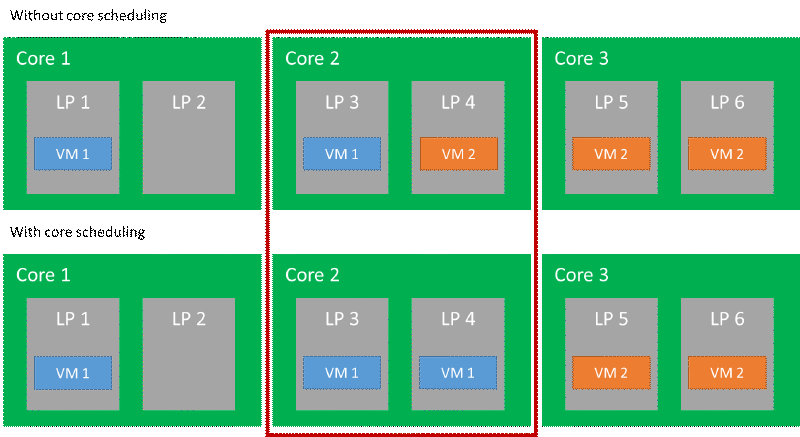

The following diagram illustrates the differences in virtual processor scheduling policies for a scenario with two different VMs (VM 1 and VM 2). As the diagram shows, without core scheduling enabled it is possible for code from two different VMs to execute simultaneously on a core (in this case core 2), whereas this is not possible with core scheduling enabled.
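The property that the core scheduler enforces can be stated as a simple invariant: at any instant, the sibling LPs of a core run virtual processors from at most one VM. The Python sketch below checks that invariant for a snapshot of assignments. The function name and the two layouts are hypothetical and only loosely mirror the scenario described, with core 2 as the mixed core.

```python
def cores_mixing_vms(core_assignments):
    """Return the cores whose sibling LPs are running more than one VM.

    core_assignments maps a core id to the VM running on each of its
    sibling LPs; None marks an idle LP.
    """
    mixed = []
    for core, sibling_vms in core_assignments.items():
        running = {vm for vm in sibling_vms if vm is not None}
        if len(running) > 1:
            mixed.append(core)
    return sorted(mixed)


# Without core scheduling: core 2 runs VM 1 and VM 2 side by side.
without_core_scheduler = {
    1: ["VM1", "VM1"],
    2: ["VM1", "VM2"],
}

# With core scheduling: each core runs virtual processors of a single VM.
with_core_scheduler = {
    1: ["VM1", "VM1"],
    2: ["VM2", "VM2"],
}

print(cores_mixing_vms(without_core_scheduler))   # [2]
print(cores_mixing_vms(with_core_scheduler))      # []
```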

On versions of Windows prior to Windows Server 2016 and for all versions of Windows Client with virtualization enabled, the core scheduler feature is not supported and it may therefore be necessary to disable HT in order to ensure the robustness of the L1 data cache flush for inter-VM isolation. This is also currently necessary on Windows Server 2016+ for scenarios that make use of Virtual Secure Mode (VSM) for isolation of secrets. When HT is disabled, it becomes impossible for sibling logical processors to execute simultaneously on the same physical core. For guidance on how to disable HT on Windows, please refer to our advisory.

Removing sensitive content from memory

Another tactic for mitigating speculative execution side channels is to remove sensitive content from the address space such that it cannot be disclosed through speculative execution.

Per-virtual-processor address spaces

Until the emergence of speculative execution side channels, there was not a strong need for hypervisors to partition their virtual address space on a per-VM basis. As a result, it has been common practice for hypervisors to maintain a virtual mapping of all physical memory to simplify memory accesses. The existence of L1TF and other speculative execution side channels has made it desirable to eliminate cross-VM secrets from the virtual address space of the hypervisor when it is acting on behalf of a VM.

Beginning with the August, 2018 security update, the Hyper-V hypervisor in Windows Server 2016+ and Windows 10 1607+ now uses per-virtual-processor (and hence per-VM) address spaces and also no longer maps all of physical memory into the virtual address space of the hypervisor. This ensures that only memory that is allocated to the VM and the hypervisor on behalf of the VM is potentially accessible during speculation for a given virtual processor. In the case of L1TF, this mitigation works in combination with the L1 data cache flush and safe use or disablement of HT to ensure that no sensitive cross-VM information becomes available in the L1.

Mitigation applicability

The mitigations that were described in the previous sections work in combination to provide broad protection for L1TF. The following tables provide a summary of the attack scenarios and the relevant mitigations and default settings for different versions of Windows Server and Windows Client:

| Attack Category | Windows Server 2016+ | Pre-Windows Server 2016 | Windows 10 1607+ | Pre-Windows 10 1607 |
|---|---|---|---|---|
| Inter-VM | Enabled: per-virtual-processor address spaces, safe page frame bits; _Opt-in_: L1 data cache flush, enable core scheduler or disable HT | Enabled: safe page frame bits; _Opt-in_: L1 data cache flush, disable HT | Enabled: per-virtual-processor address spaces, safe page frame bits; _Opt-in_: L1 data cache flush, disable HT | Enabled: safe page frame bits; _Opt-in_: L1 data cache flush, disable HT |
| Intra-OS | Opt-in: safe page frame bits | Opt-in: safe page frame bits | Enabled: safe page frame bits | Enabled: safe page frame bits |
| Enclave | Enabled (SGX): L1 data cache flush; _Opt-in (SGX/VSM)_: disable HT | Enabled (SGX): L1 data cache flush; _Opt-in (SGX/VSM)_: disable HT | Enabled (SGX): L1 data cache flush; _Opt-in (SGX/VSM)_: disable HT | Enabled (SGX): L1 data cache flush; _Opt-in (SGX/VSM)_: disable HT |

More concisely, the relationship between attack scenarios and mitigations for L1TF is summarized below:

| Mitigation Tactic | Mitigation Name | Inter-VM | Intra-OS | Enclave |
|---|---|---|---|---|
| Prevent speculation techniques | Flush L1 data cache on security domain transition | Yes | | Yes |
| Prevent speculation techniques | Safe scheduling of sibling logical processors | Yes | | Yes |
| Prevent speculation techniques | Safe page frame bits in not present page table entries | Yes | Yes | |
| Remove sensitive content from memory | Per-virtual-processor address spaces | Yes | | |

Wrapping up

In this post, we analyzed a new speculative execution side channel vulnerability known as L1 Terminal Fault (L1TF). This vulnerability affects a broad range of attack scenarios and the relevant mitigations require a combination of software and firmware (microcode) updates for systems with affected Intel processors. The discovery of L1TF demonstrates that research into speculative execution side channels is ongoing and we will continue to evolve our response and mitigation strategy accordingly. We continue to encourage researchers to report new discoveries through our Speculative Execution Side Channel bounty program.

Matt Miller, Microsoft Security Response Center (MSRC)