Introduction

Microsoft engineering teams use the Security Development Lifecycle to ensure our products are built in alignment with Microsoft’s Secure Future Initiative security principles: Secure by Design, Secure by Default, and Secure Operations.

A key component of the Security Development Lifecycle is security testing, which aims to discover and mitigate security vulnerabilities before adversaries can exploit them. Most enterprises have mature solutions in place for manual processes such as code review, penetration testing, and red teaming, as well as automated processes such as Static Application Security Testing (SAST). However, they often struggle with Dynamic Application Security Testing (DAST) when it comes to securing API web services. This is because while SAST tools can easily be integrated into web services’ CI/CD pipelines by a DevSecOps team without additional work from the services’ developers, DAST tools are not as simple to orchestrate.

In this blog post, we recap a recent BlueHat talk about the journey that Microsoft’s Azure Edge & Platform organization is on, using security automation to perform DAST at scale. This effort targets thousands of internal and external API web services across Microsoft’s portfolio of 1st party services, which are applications or services that are created, owned, and managed by Microsoft itself.

Why most enterprises have trouble scaling DAST

DAST is a software testing process that seeks to elicit security vulnerabilities or weaknesses by exercising the software at runtime. In the context of testing web applications and web services, this typically consists of sending malformed web requests via fuzzing or other means to identify bugs based on the web server’s responses to those requests. There are many excellent commercial and open-source DAST tools available for scanning web applications, including one from Microsoft named RESTler. Whether we want to scan web servers with a single DAST tool or a dozen, each tool needs to know the web endpoints, and the web server’s endpoint logic needs to process the tool’s requests. This requires access to a web service’s API specification and valid authentication credentials, neither of which is trivial to provide at scale in a secure way without manual effort from developers.

Web endpoint discovery

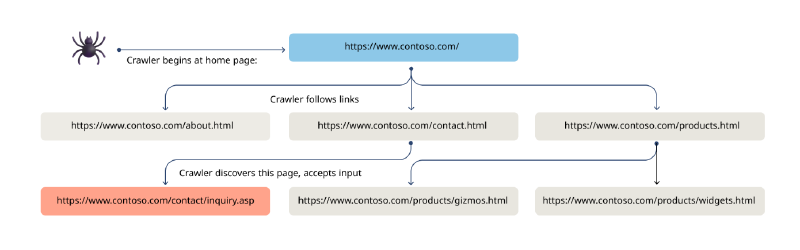

Historically, the only input required by a DAST tool was the target web server address to scan. A DAST tool would automatically crawl the given site, starting at the home page, and follow links embedded in the HTML to discover all the site pages. In doing so, the DAST tool would discover all dynamic functionality offered by the site, in the context of pages (or more generally, URLs) that accept input from the user.

In the image above, we can see that the DAST tool begins its crawl at https://www.contoso.com/, follows a link from the homepage to https://www.contoso.com/contact.html, and discovers a contact form on that page that accepts user input via URL parameters and/or a request body. The DAST tool can now send requests to the https://www.contoso.com/contact/inquiry.asp page with malformed values trying to discover security vulnerabilities in the handling of inputs on the dynamic page.
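The crawl described above can be sketched in a few lines of Python. This is only an illustration, not how any particular DAST tool is implemented: the in-memory `SITE` dictionary stands in for the real web server, and the form field names `email` and `message` are assumptions made for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory pages standing in for https://www.contoso.com/
SITE = {
    "https://www.contoso.com/": '<a href="/contact.html">Contact</a>',
    "https://www.contoso.com/contact.html": (
        '<form action="/contact/inquiry.asp" method="post">'
        '<input name="email"><input name="message"></form>'
    ),
}


class LinkAndFormParser(HTMLParser):
    """Collects link targets and form actions with their input names."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.forms = []  # list of (action, [input names])

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and "href" in attrs:
            self.links.append(attrs["href"])
        elif tag == "form":
            self.forms.append((attrs.get("action", ""), []))
        elif tag == "input" and self.forms and "name" in attrs:
            self.forms[-1][1].append(attrs["name"])


def crawl(start_url):
    """Breadth-first crawl returning {form URL: [parameter names]}."""
    seen, queue, targets = set(), [start_url], {}
    while queue:
        url = queue.pop(0)
        if url in seen or url not in SITE:
            continue
        seen.add(url)
        parser = LinkAndFormParser()
        parser.feed(SITE[url])
        for action, names in parser.forms:
            targets[urljoin(url, action)] = names
        queue.extend(urljoin(url, href) for href in parser.links)
    return targets


targets = crawl("https://www.contoso.com/")
```

Starting from the home page, the crawl reaches the contact page and records the form's target URL and inputs, which are the values a scanner would then fuzz.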

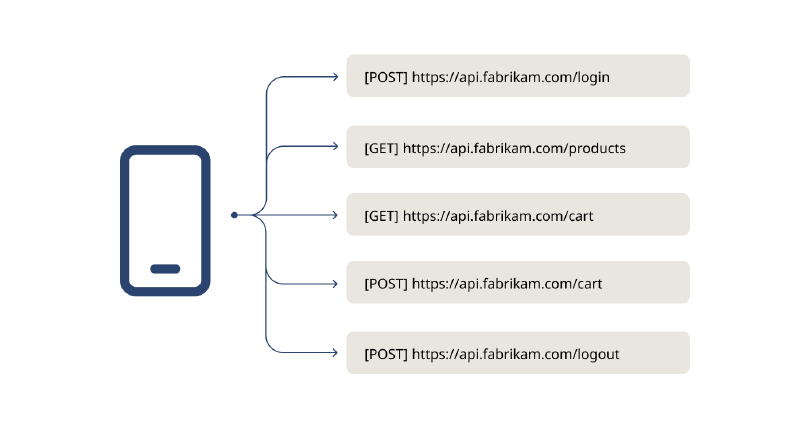

In general, this crawling approach still works reasonably well for traditional websites but cannot be used for web services whose functionality is solely exposed via APIs. Consider the diagram above, where a mobile app communicates with the REST API web service https://api.fabrikam.com/.

In this example, the mobile app has the REST API endpoints /login, /products, /cart, and /logout hardcoded into the app. When the DAST scanner tries to open https://api.fabrikam.com/, it gets an error.

To support this scenario, a modern DAST tool typically allows users to provide a list of endpoints as input via an OpenAPI Specification (sometimes referred to as a Swagger Specification). This specification enumerates a detailed list of a web service’s API URLs, all the parameters accepted by each API, and metadata describing the expected format of each parameter. OpenAPI Specifications can be generated by packages such as NSwag and swagger-core. Using these packages requires access to both the source code and the working build environment for the given service. This requires developers to manually integrate these solutions into the web service project, which could be a good approach for smaller organizations.
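To make this concrete, here is a minimal sketch of what an OpenAPI Specification for the api.fabrikam.com example might look like, and how a scanner could enumerate its targets from it. The HTTP methods and the `category` parameter are assumptions made for illustration; a real specification would be generated from the service itself.

```python
import json

# Illustrative OpenAPI 3.0 document; methods and parameters are assumed.
SPEC_JSON = """
{
  "openapi": "3.0.3",
  "info": {"title": "Fabrikam API (illustrative)", "version": "1.0.0"},
  "servers": [{"url": "https://api.fabrikam.com"}],
  "paths": {
    "/login": {"post": {"responses": {"200": {"description": "OK"}}}},
    "/products": {"get": {
      "parameters": [
        {"name": "category", "in": "query", "schema": {"type": "string"}}
      ],
      "responses": {"200": {"description": "OK"}}}},
    "/cart": {"post": {"responses": {"200": {"description": "OK"}}}},
    "/logout": {"post": {"responses": {"200": {"description": "OK"}}}}
  }
}
"""


def scan_targets(spec):
    """List (METHOD, URL) pairs a scanner would exercise."""
    base = spec["servers"][0]["url"]
    return [
        (method.upper(), base + path)
        for path, operations in spec["paths"].items()
        for method in operations
    ]


targets = scan_targets(json.loads(SPEC_JSON))
```

With this input, the scanner no longer needs to crawl: every endpoint and parameter is listed up front.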

However, if an enterprise security team asked all of their company’s developers to integrate such solutions into their web service projects, they would certainly receive pushback from leadership and developers due to the massive cost of manually going through the process of updating each project’s code and build configuration.

Automated OpenAPI Specification generation solutions that do scale (sort of)

There are several techniques to automatically generate OpenAPI Specifications that don’t require manual per-service developer involvement.

One solution is to monitor requests sent to the target web server and extrapolate an OpenAPI Specification based on those requests in real-time. This monitoring could be performed client-side, server-side, or in-between on an API gateway, load-balancer, etc. This is a scalable, automatable solution that does not require each developer’s involvement. However, it only discovers endpoints that are actually called while monitoring is running, so its coverage may be incomplete. For example, if no users called the /logout endpoint, then the /logout endpoint would not be included in the automatically generated OpenAPI Specification.
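The coverage gap is easy to see in a small sketch. The code below infers paths, methods, and query parameter names from a list of observed requests; the traffic sample is invented for illustration and is not taken from any real service.

```python
from urllib.parse import parse_qsl, urlsplit

# Hypothetical traffic captured during a monitoring window.
observed = [
    ("POST", "https://api.fabrikam.com/login"),
    ("GET", "https://api.fabrikam.com/products?category=books"),
    ("GET", "https://api.fabrikam.com/products?category=toys&page=2"),
    ("POST", "https://api.fabrikam.com/cart"),
]


def infer_paths(requests):
    """Build {path: {method: set of query parameter names}} from traffic."""
    paths = {}
    for method, url in requests:
        parts = urlsplit(url)
        params = paths.setdefault(parts.path, {}).setdefault(method.lower(), set())
        params.update(name for name, _ in parse_qsl(parts.query))
    return paths


inferred = infer_paths(observed)
```

Because nobody called /logout during the window, it is absent from `inferred`, and a scanner driven by this output would never test it.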

Another solution is to statically analyze the source code for a web service and generate an OpenAPI Specification based on defined API endpoint routes that the automation can glean from the source code. Microsoft internally prototyped this solution and found it to be non-trivial to reliably discover all API endpoint routes and all parameters by parsing abstract syntax trees without access to a working build environment. This solution was also unable to handle scenarios of dynamically registered API route endpoint handlers. Microsoft also internally prototyped a variation of the static analysis solution that used an LLM to derive an OpenAPI Specification from source code. We saw some promising results, but unfortunately the solution was non-deterministic.
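The limitation with dynamically registered routes can be illustrated with a Python analogue. This is not the prototype described above: it parses a hypothetical Flask-style source string (the names `load_plugin_names` and `make_handler` are invented) and collects only routes that appear as string literals in decorators.

```python
import ast

# Hypothetical service source; it is only parsed, never executed.
SOURCE = '''
@app.route("/login", methods=["POST"])
def login(): ...

@app.route("/products")
def products(): ...

# Registered dynamically; these paths only exist at runtime.
for name in load_plugin_names():
    app.add_url_rule("/" + name, name, make_handler(name))
'''


def static_routes(source):
    """Return route literals found in @<obj>.route("...") decorators."""
    routes = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.FunctionDef):
            continue
        for deco in node.decorator_list:
            if (
                isinstance(deco, ast.Call)
                and isinstance(deco.func, ast.Attribute)
                and deco.func.attr == "route"
                and deco.args
                and isinstance(deco.args[0], ast.Constant)
            ):
                routes.append(deco.args[0].value)
    return routes


found = static_routes(SOURCE)
```

The two decorated routes are found, but the routes registered inside the loop are invisible to the analysis because their paths are computed at runtime.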

To truly scale DAST for thousands of web services, we need to automatically, comprehensively, and deterministically generate OpenAPI Specifications. Even with such a solution in place, we still need to ensure that our DAST tools can exercise the functionality of the tested services.

Authentication and authorization

Most enterprise web services require authentication. To fully exercise the functionality of such a web service, the DAST tool needs to provide an authentication credential, and the web service needs to authorize the DAST tool to submit requests to the service. In testing environments, this would typically mean using a unique testing account per service or using a company-wide testing account and configuring the DAST tool accordingly. The former requires significant complexity in orchestrating the DAST tool’s execution per service, and the latter allows for a central point of compromise. Both approaches still require each service owner to manually make configuration changes. As with OpenAPI Specification generation, leadership and developers would likely push back on this massive cost.

A scalable DAST solution

As a Cloud Service Provider, Microsoft owns the IaaS and PaaS platforms on which our web services run and has the ability to generate inventories of our 1st party services and deploy custom security tooling onto those services. The Azure Edge & Platform organization, responsible for Microsoft’s edge computing and operating systems, is creating a solution to take advantage of this deployment capability by developing an agent that can be deployed to the non-production test instances of all our IaaS-based and PaaS-based web services. These non-production instances share the same code as the production instances. Performing DAST in non-production environments protects the fidelity of data in production environments. The DAST agent is one component of a larger DAST orchestration platform that obviates the need for access to web services’ build environments and the need to trouble developers with manually integrating new functionality into their services’ CI/CD pipelines. The agent has full access to the runtime state of the running web service’s process, giving it the ability to inspect memory and load new code into the running process.

Web endpoint discovery

A typical web server maintains a mapping of URL routes to route handlers to manage incoming requests. The agent leverages this architecture by inspecting the memory of the web server’s process at runtime to discover its route mappings and generates an OpenAPI Specification from the identified mappings.

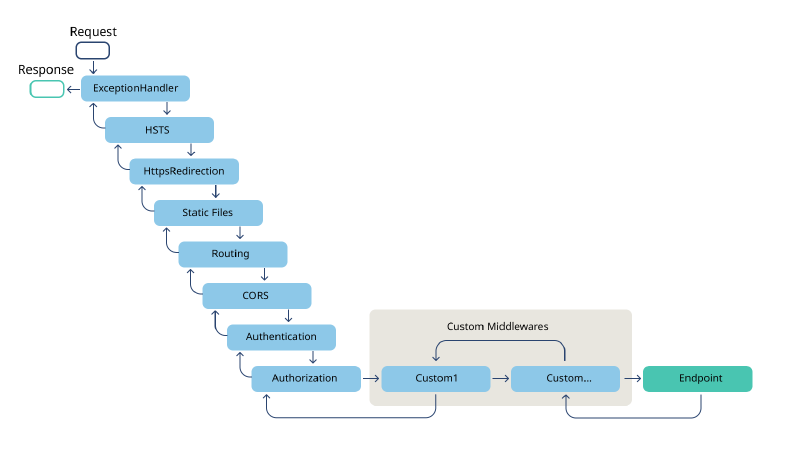

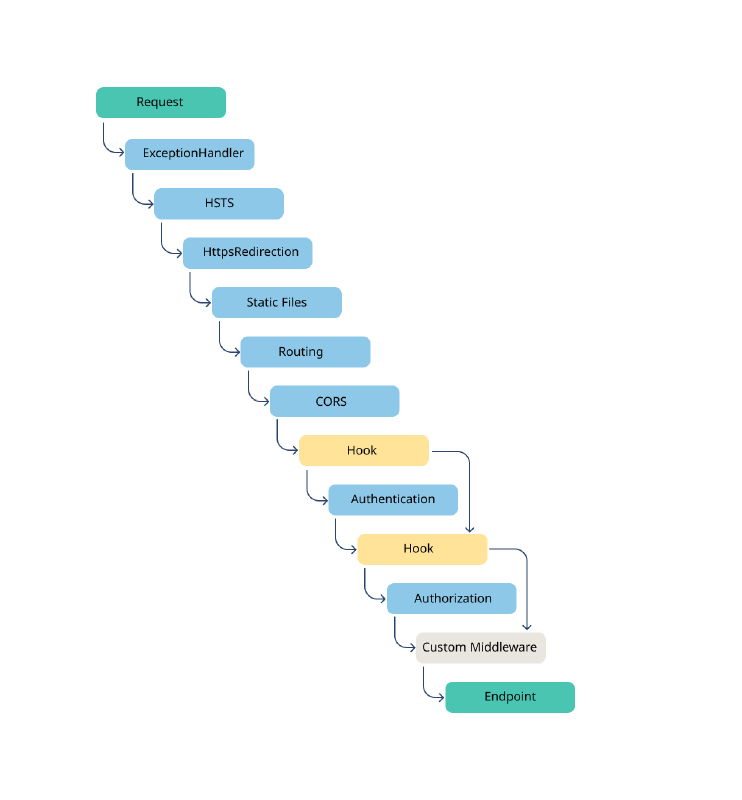

The vast majority of web services in our organization are written in ASP.NET, which uses a request handling pipeline to process incoming requests.

Each block in the diagram above is a middleware component that is given the opportunity to process the incoming request before handing it to the next component in the pipeline (or alternatively short-circuiting the remaining middleware components).
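The pipeline pattern itself is framework-independent. The following Python sketch is an analogue, not ASP.NET Core code: each component receives the request context and the next delegate, and may either call the next delegate or short-circuit. The component names and the status-code return values are assumptions for the example.

```python
def bind(component, next_delegate):
    """Turn a (ctx, next) component into a single-argument delegate."""
    return lambda ctx: component(ctx, next_delegate)


def build_pipeline(components, endpoint):
    """Compose components so each one receives the delegate that follows it."""
    next_delegate = endpoint
    for component in reversed(components):
        next_delegate = bind(component, next_delegate)
    return next_delegate


def logging_component(ctx, next_delegate):
    ctx.setdefault("trace", []).append("logging")
    return next_delegate(ctx)


def authentication_component(ctx, next_delegate):
    ctx.setdefault("trace", []).append("authentication")
    if "token" not in ctx:
        return 401  # short-circuit: later components never run
    return next_delegate(ctx)


def endpoint(ctx):
    ctx["trace"].append("endpoint")
    return 200


handle = build_pipeline([logging_component, authentication_component], endpoint)

ok_ctx = {"token": "t"}
ok_status = handle(ok_ctx)

anon_ctx = {}
anon_status = handle(anon_ctx)
```

The request without a token never reaches the endpoint, which is why a scanner without credentials cannot exercise the endpoint logic.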

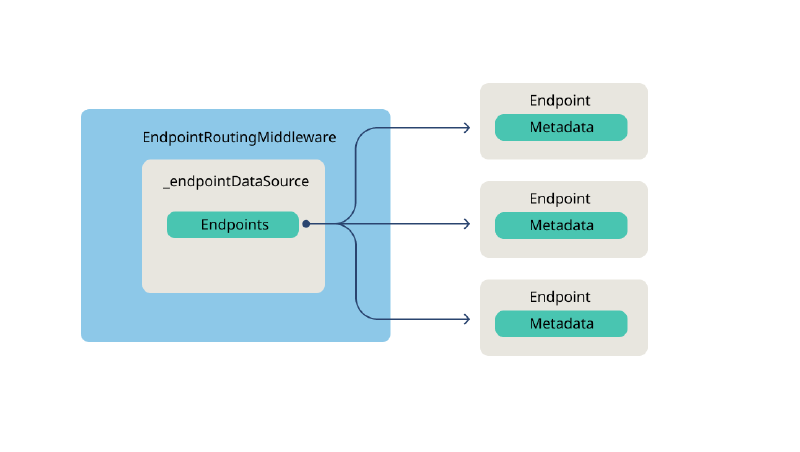

The last middleware component in the pipeline is the Endpoint component. Per the documentation for Microsoft.AspNetCore.Builder.EndpointRoutingApplicationBuilderExtensions.UseEndpoints(), “The Microsoft.AspNetCore.Routing.EndpointRoutingMiddleware defines a point in the middleware pipeline where routing decisions are made, and an Endpoint is associated with the HttpContext.” We examine the source code for Microsoft.AspNetCore.Routing.EndpointRoutingMiddleware and see that it contains a field named _endpointDataSource, which is a Microsoft.AspNetCore.Routing.EndpointDataSource object, which itself contains a property named Endpoints. Per the documentation, that Endpoints property is “a read-only collection of Endpoint instances”. Each of those Endpoint instances contains a Metadata collection that holds the details of the route endpoint.

Our solution for web endpoint discovery involves the agent automatically discovering the request handling pipeline in the web server process’s memory at runtime and following the object fields described above to find all Endpoint object instances. Automation in the agent then processes the metadata associated with each of those instances and uses Microsoft.OpenApi.Writers.OpenApiJsonWriter to dynamically generate a complete OpenAPI Specification for the web service, containing all API endpoints and their detailed parameters. That OpenAPI Specification can then be provided as input to a DAST tool to scan the target web service.
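As a rough illustration of the idea, the Python sketch below walks a pipeline of live objects, follows a private field to the route table, and emits an OpenAPI document. It is an analogue under stated assumptions, not the agent's code: the classes mirror the names in the text but are invented here, and the `methods` and `parameters` metadata keys are hypothetical.

```python
import json


class Endpoint:
    def __init__(self, pattern, metadata):
        self.pattern = pattern
        self.metadata = metadata


class EndpointDataSource:
    def __init__(self, endpoints):
        self.endpoints = endpoints


class RoutingMiddleware:
    def __init__(self, data_source):
        self._endpoint_data_source = data_source  # private field holding routes


class OtherMiddleware:
    pass


def find_endpoints(pipeline):
    """Follow object fields from the pipeline to the registered endpoints."""
    for component in pipeline:
        source = getattr(component, "_endpoint_data_source", None)
        if source is not None:
            return list(source.endpoints)
    return []


def to_openapi(title, endpoints):
    """Convert endpoint metadata into a minimal OpenAPI 3.0 document."""
    paths = {}
    for ep in endpoints:
        for method in ep.metadata["methods"]:
            paths.setdefault(ep.pattern, {})[method.lower()] = {
                "parameters": [
                    {"name": name, "in": "query", "schema": {"type": kind}}
                    for name, kind in ep.metadata.get("parameters", {}).items()
                ],
                "responses": {"default": {"description": "Unspecified"}},
            }
    return {
        "openapi": "3.0.3",
        "info": {"title": title, "version": "0.0.0"},
        "paths": paths,
    }


pipeline = [
    OtherMiddleware(),
    RoutingMiddleware(EndpointDataSource([
        Endpoint("/login", {"methods": ["POST"]}),
        Endpoint("/products", {"methods": ["GET"], "parameters": {"category": "string"}}),
        Endpoint("/logout", {"methods": ["POST"]}),
    ])),
]

spec = to_openapi("Discovered API", find_endpoints(pipeline))
spec_json = json.dumps(spec, indent=2)
```

Because the route table is read from the running process, every registered endpoint is included, whether or not it has ever been called and however it was registered.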

While the details above are specific to ASP.NET Core, the same approach of route discovery via runtime memory inspection could be applied to web services written in other frameworks like ASP.NET Framework, PHP, Node.js, etc.

Authentication and authorization

To overcome the challenges and risks of using testing accounts to allow DAST tools to send authenticated requests to web services, we can hook the Authentication and Authorization middleware components at runtime, eliminating the need for testing accounts altogether.

Our solution for authentication and authorization is a technique we call Transparent Auth. This involves inserting a new delegate before the Authentication component and before the Authorization component. Our agent does this by automatically traversing the request handling pipeline in memory and inserting new delegates at runtime.

While the Transparent Auth details are specific to ASP.NET Core, the same approach of runtime authentication and authorization hooking could be applied to web services written in other frameworks like ASP.NET Framework, PHP, Node.js, etc.

Authentication hook

The hook we inject before the pipeline’s Authentication component inspects the client’s request to determine if it is from one of our orchestrated DAST tools. We use an out-of-process component to track all requests originating from the orchestrated DAST tool, and our hook communicates with that component to verify that the request was indeed one that originated from the orchestrated DAST tool. If our hook determines that the request is not from the orchestrated DAST tool then it passes the request to the original Authentication component, which processes the request as normal.

On the other hand, if the hook determines that the request is from the orchestrated DAST tool, it has some extra work to do. It can’t merely skip over the original Authentication component because this could cause problems if other middleware components try to make use of the identity associated with the request. For example, imagine a web service that responds to authenticated requests with, “Hello, <username>” – if there is no username associated with the identity of the request’s context then this functionality would throw an exception. To address this, our hook creates a new ClaimsPrincipal and associates that ClaimsPrincipal with the current request’s context. The ClaimsPrincipal comprises a collection of Claim objects that flesh out the identity of the mock user, with values for the mock user’s name, email address, etc.

Once the request’s context is updated with this new ClaimsPrincipal, our hook skips over the original Authentication component and calls the request delegate immediately following the original Authentication component.
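The hook's control flow can be sketched in Python as follows. This is a simplified analogue rather than the ASP.NET Core implementation: a plain dictionary stands in for the ClaimsPrincipal, `is_from_dast_tool` is a placeholder for the out-of-process verification, and the token value and mock user details are invented for the example.

```python
def authentication(ctx, next_delegate):
    """Stand-in for the original Authentication component."""
    if ctx.get("token") != "valid-user-token":
        return "401 Unauthorized"
    ctx["user"] = {"name": "alice"}
    return next_delegate(ctx)


def endpoint(ctx):
    """Endpoint logic that depends on an identity being present."""
    return f"Hello, {ctx['user']['name']}"


def is_from_dast_tool(ctx):
    """Placeholder for asking the out-of-process component (assumption)."""
    return ctx.get("from_dast", False)


def make_authentication_hook(original, after_original, verify):
    """Build a delegate that sits in front of the original component."""

    def hook(ctx):
        if not verify(ctx):
            # Not a DAST request: authenticate as normal.
            return original(ctx, after_original)
        # Mock identity so later components that read the user do not fail.
        ctx["user"] = {
            "name": "dast-mock-user",
            "email": "dast-mock-user@example.invalid",
        }
        # Skip the original component and continue with what follows it.
        return after_original(ctx)

    return hook


pipeline = make_authentication_hook(authentication, endpoint, is_from_dast_tool)

dast_response = pipeline({"from_dast": True})
user_response = pipeline({"token": "valid-user-token"})
anon_response = pipeline({})
```

Ordinary requests still pass through the original authentication logic unchanged; only verified DAST requests receive the mock identity.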

Authorization hook

The hook that we inject before the pipeline’s Authorization component starts off similarly to the Authentication hook. It verifies the client’s request is from our orchestrated DAST tool, and passes the request to the original Authorization component if it is not.

If the hook determines that the request is from the orchestrated DAST tool, it makes a couple of small updates to the request’s context that would normally be done upon successful authorization. It then skips over the original Authorization component and calls the request delegate immediately following the original Authorization component, thereby circumventing the authorization check and allowing the service’s endpoint logic to process the request.

DAST orchestration platform architecture

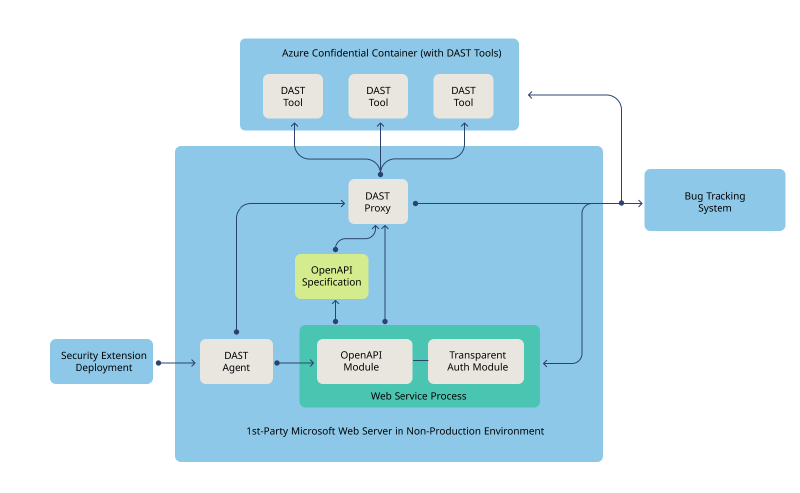

The functionality of the agent described above fits into the overall DAST orchestration platform architecture, illustrated at a high level in the diagram above.

Microsoft’s security extension deployment mechanism deploys the agent onto 1st-party Microsoft web servers. The agent then injects an OpenAPI Specification Generator Module and a Transparent Auth Module into each of the web service processes running on the web server. The OpenAPI Specification Generator Module generates the OpenAPI Specification for the web service and passes it to the DAST Proxy process, which was launched by the agent. The DAST Proxy starts a remote Confidential Container, sends the OpenAPI Specification to the container, and runs orchestrated DAST tools in the container based on the supplied OpenAPI Specification.

We use Confidential Containers for several reasons. First, their Trusted Execution Environments ensure that if an attacker were to compromise Azure’s Container Instances service, they wouldn’t be able to tamper with the code or data in our containers. Confidential Containers also enable the agent to verify the attestation report of the container with which it’s communicating to ensure that the container was built from the official DAST container image and that the security policy applied to the container is the official DAST container security policy. We use one container per target web service, and each container operates in its own pod, ensuring the DAST containers can’t communicate with each other. Each pod is firewalled to prevent outbound connections and to ensure that inbound connections are only allowed from the agent that launched the pod’s container. Together, these protections prevent an attacker from circumventing authentication or authorization checks, impersonating one of our orchestrated DAST tools, or discovering DAST scan results.

The DAST Proxy proxies requests from the DAST Tools to the target Web Service Process. The Transparent Auth Module intercepts each request and validates the request with the DAST Proxy. If the DAST Proxy confirms that the request is from an orchestrated DAST Tool then the Transparent Auth Module skips the standard authentication and authorization checks and allows the endpoint components to process the request. The DAST Proxy then proxies the web service’s responses back to the DAST Tools. Once the DAST Tools complete their scans, the DAST Proxy collects the results and sends the security findings to a centrally managed bug tracking system. Those centrally collected security findings can then be handled by the service owner, their security assurance team, and any associated risk management teams.
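The post does not describe how the DAST Proxy and the Transparent Auth Module recognize a request, so the following Python sketch is purely hypothetical. It assumes the proxy tags each forwarded request with a random identifier in an invented `X-Dast-Request-Id` header and remembers it until the response has been relayed; the module then asks the proxy whether that identifier is outstanding.

```python
import secrets


class DastProxy:
    """Hypothetical tracker for requests the proxy is currently forwarding."""

    def __init__(self):
        self._outstanding = set()

    def forward(self, request):
        """Tag a request from a DAST tool and remember its identifier."""
        request_id = secrets.token_hex(16)
        self._outstanding.add(request_id)
        headers = {**request.get("headers", {}), "X-Dast-Request-Id": request_id}
        return {**request, "headers": headers}

    def confirm(self, request_id):
        """Answer the module's question: did this request come through me?"""
        return request_id in self._outstanding

    def complete(self, request_id):
        """Forget the identifier once the response has been relayed."""
        self._outstanding.discard(request_id)


def is_from_dast_tool(proxy, request):
    """Check performed by the hooks before bypassing the standard checks."""
    request_id = request.get("headers", {}).get("X-Dast-Request-Id")
    return request_id is not None and proxy.confirm(request_id)


proxy = DastProxy()
tagged = proxy.forward({"method": "POST", "path": "/cart"})
forged = {"method": "POST", "path": "/cart", "headers": {"X-Dast-Request-Id": "guess"}}

in_flight = is_from_dast_tool(proxy, tagged)
forged_ok = is_from_dast_tool(proxy, forged)

proxy.complete(tagged["headers"]["X-Dast-Request-Id"])
after_completion = is_from_dast_tool(proxy, tagged)
```

In this sketch, a request with a guessed identifier is rejected, and an identifier stops being accepted once its response has been relayed, so both the Authentication and Authorization hooks can check the same in-flight request.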

Conclusion and looking ahead

In the coming months, Microsoft’s Azure Edge & Platform organization will continue to develop and roll out the agent to 1st-party services. We will also explore leveraging the agent-based architecture to support more advanced DAST-related concepts such as code coverage monitoring and associating DAST security findings with the vulnerable code blocks. We’re excited about opportunities to converge and deduplicate findings across multiple DAST tools and to use AI to identify false positives and determine risk confidence.

In conclusion, this work underscores Microsoft’s dedication to securing our services and safeguarding our customers’ data, highlighting our security leadership in the hyperscaler space. We hope that it inspires security professionals outside of Microsoft to adopt similar strategies to secure their own services at scale.

Jason Geffner

Principal Security Architect